Why is computer?

Many people start learning to program in order to understand computers better. The first step is to choose a programming language, and they often then find themselves caught up in debates about which language is best for beginners.

However, this misses the point. In order to understand computers, we must first consider why humans attempted to build calculating machines using gears and connecting rods in the first place.

The "Thinking" Machine

Babbage was motivated to create error-free logarithms for the natural numbers tables because human computers were known to make mistakes. He designed the Difference Engine to automate the production of these tables. He later conceptualised the Analytical Engine, a programmable mechanical computer capable of performing any calculation when given the right instructions. And the Analytical Engine was a Turing-complete mechanical computer before the Turing machine. It has almost all the components of a modern computer.

iFrame

Ada Lovelace is widely regarded as the "first programmer", having created the first algorithm intended for use with the Analytical Engine, long before computers were invented.

Let's put history to one side for the moment and consider the fundamentals of computation. Like mathematicians, we use formal systems to describe and explain computational processes.

- A set of symbols

- A set of axioms (initial strings of symbols)

- A set of rules for manipulating the symbols (productions)

For example, we can define a formal system for addition as follows:

- Symbols: ●, +, →

- Axioms: ● + ● → ●●

- Rules: If you have an expression of the form A + B → C, you can add another ● to both sides: A + B + ● → C●

Or you can using a different set of symbols and rules:

- Symbols: ⭐️, +, =

- Axioms: ⭐️ = ⭐️

- Rules: If you have an expression of the form A = B, you can add ⭐️ with a connecting + to left side and directly add ⭐️ to right side: ⭐️ : A + ⭐️ = B⭐️

Both systems can be used for addition, but they may use different symbols and rules. This demonstrates that computation is not dependent on particular symbols or representations, but rather on the underlying structure and rules of the system.

The number of symbols on the right-hand side of the equation represents the sum. Numbers such as one, two and three simply represent the quantity of symbols and do not affect the computation itself. We assign meaning to these labels in our minds; for example, it could be two stars or four apples. The symbols themselves have no inherent meaning.

Mathematics is a more complex and useful formal system than the simple addition system described above.

Turing and Computability

The lambda calculus, also written as λ-calculus, is a formal system for expressing computation based on function abstraction and application, involving variable binding and substitution. It was introduced by Alonzo Church in the 1930s.

In 1936, Alan Turing introduced the concept of the Turing machine. This comprises an infinite tape divided into cells, a tape head that can read and write symbols onto the tape, and a set of rules that determine how the machine behaves based on its current state and the symbol being read.

The Halting Problem, which concerns the question of whether a given program will eventually stop running or continue indefinitely. Turing proved that there is no general algorithm that can solve this problem for all possible programs.

This proves that not all problems can be solved using a computer. There are two categories of problem. The first comprises those that can be solved by a Turing machine or algorithm. Examples include basic arithmetic operations, as well as sorting and search algorithms. The second set comprises 'uncomputable' problems, meaning that no Turing machine or algorithm can solve them.

Building a Turing Machine

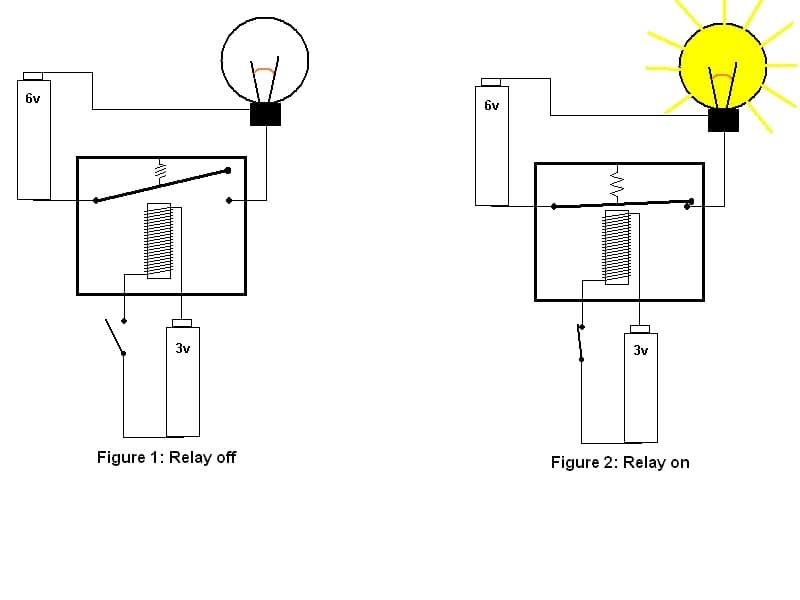

Could such a machine be built from scratch? We could use light bulbs to represent the on/off states and control them using a relay. Then, we could change the state of the machine by switching the relays on and off.

While it is possible to build a Turing machine in this way, it is not practical. This is because it would require a large number of relays and light bulbs, meaning it would be very bulky and consume a lot of power. Indeed, the first computers were built using vacuum tubes and occupied multiple floors of a building.

Semiconductor and Transistor

As technology advanced, we discovered new materials, such as semiconductors, which enabled us to build transistors. Transistors are much smaller and more efficient than relays, allowing us to build smaller yet more powerful computers.

By adding impurities to silicon, we can create regions with different electrical properties. This allows us to produce components such as diodes and transistors, which act as switches that control the flow of electricity.

A PN junction is the boundary between two types of semiconductor material: P-type and N-type. P-type material has an abundance of holes (positive charge carriers), whereas N-type material has an abundance of electrons (negative charge carriers). When these materials are joined, a depletion zone forms at the junction where the holes and electrons recombine, creating an area with no free charge carriers. This depletion zone acts as a barrier to the flow of electric current.

Applying an external voltage to the PN junction alters the width of the depletion zone, thereby controlling the flow of current through it. This fundamental principle lies at the heart of transistor operation, and transistors are essential components in modern electronics.

Let's build a component that implements the Boolean algebra inverter, i.e. ¬0 = 1 and ¬1 = 0. The input is connected to the control gate (G) of the transistor. When the input is high (1), a conductive channel forms between the source (S) and the drain (D). This allows current to flow from the drain to the source, resulting in a low output of 0. Conversely, when the input is low, the channel does not form, meaning that current cannot flow and the output is high.

We can also join two transistors together in a parallel configuration to create a more complex logic gate, such as an OR gate.

There are many different types of logic gate, including the AND, NAND, NOR and XOR gates. You can find out more in this excellent video.

iFrame

We can use logic gates to add two single-digit binary (1-bit) numbers together. If we also consider the carry bit from the previous addition and output the carry bit for the next addition(Full-adder), we can add multi-bit binary numbers together. Combining many of these adders creates an arithmetic logic unit (ALU), which can perform various arithmetic and logical operations. The ALU is a fundamental building block of a computer's central processing unit (CPU).

Computers use binary digits, or bits, to represent data because two states (on/off, true/false, 1/0) can easily be represented using physical components such as transistors. This makes designing and building reliable digital circuits that can perform complex computations easier. You can read more about how signed numbers are represented using two's complement here.

If you are interested in how information is generally encoded, transmitted and decoded, you can read about Information Theory

However, it is difficult to input instructions and data into a computer using only 1s and 0s. Therefore, hexadecimal (base 16) is usually used to represent binary numbers in a more compact and human-readable form. Instructions are written in assembly language, which uses mnemonics such as ADD, SUB and MOV to perform mathematical operations and save values to storage (registers) instead of binary codes. For example:

ADD 2, 3; MOV R1, 5; SUB R2, R1So-called "high-level programming languages", such as Python, JavaScript and C++, are designed to be more human-readable and easier to write than assembly language. A compiler or interpreter then translates these into machine code so that the computer can execute them.

You now have a complete understanding of what a computer is and how it works. It is a machine that performs computations by manipulating symbols according to a set of rules. These symbols have no inherent meaning, but we assign meaning to them in our minds. Computers are built using physical components, such as transistors, and physical processes are ultimately involved in computation.